Using Data Sync to Screen Scrape Data from Websites

Data Synchronisation Studio can be a very useful tool to extract structured data from a typically unstructured source such as the huge resource of the Internet. In this example we will show you how in about 10 minutes you can create a custom data sync data source that lists the Free Email Domain names from a List on this website http://www.joewein.de/sw/spam-freemailer.htm.

Step 1

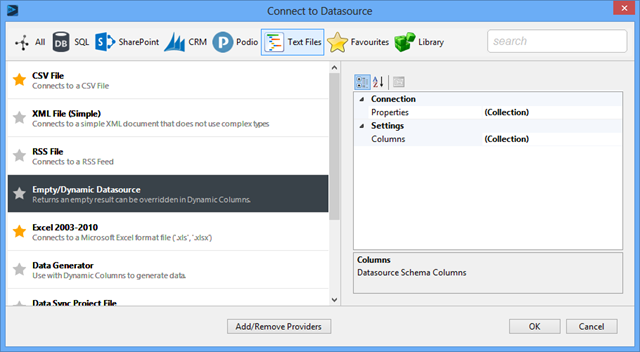

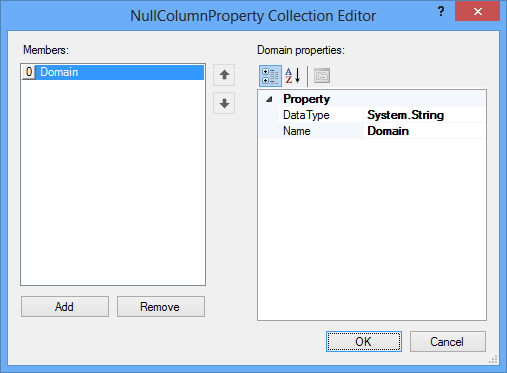

Define the Source Schema Using the Empty Data source Provider.

Create a single column in the Columns collection called “DomainName” this will return the Domain Name from the Website.

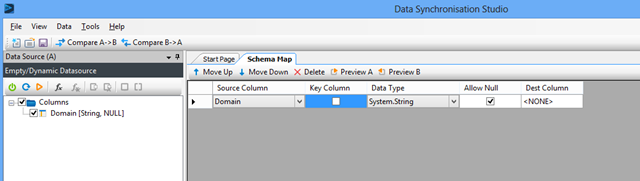

This results a very simple 1 column schema and currently returns no results.

Step 2 – Write the Code

Next we need to write the code that will override the row loading in Data Sync, download the webpage and extract all the domain names from the page.

Lucky for us this is actually a very trivial process.

- Create a List

to contain all the Domain Names - In the Setup() Method load and parse the Data.

- In the GetDataTable() method return the row data from the List

in Step 1.

The parse code below simply returns each line as a new domain name between the

tags in the web page.The GetDataTable() code is mainly boiler plate code the main part of interest is in the select statement where we match the requested “DomainName” column.

#region Usings using System; using System.Collections.Generic; using System.Data; using System.Globalization; using System.Text; using System.Linq; using Simego.DataSync; #endregion using Simego.DataSync.Providers.Null; /// <summary> /// This module of code allows you to define some columns in code or Dynamic Columns /// To Create new Dynamic Columns simply expose them as properties in this class. /// </summary> partial class DataSourceRowOverride : Simego.DataSync.DynamicColumns.DataSourceRowInternal //Do Not Change This Line { private List<string> domainList = new List<string>(); public override DataTableStore GetDataTable(DataTableStore dt, IDataSourceReader reader) { //Get the Actual Reader Instance. NullDataSourceReader SourceReader = (NullDataSourceReader)reader; //Create a Schema Mapping Helper DataSchemaMapping mapping = new DataSchemaMapping(reader.SchemaMap, reader.Side); for(int i=0; i<domainList.Count; i++) { var row = dt.NewRow(); foreach (DataSchemaItem item in reader.SchemaMap.GetIncludedColumns()) { string columnName = mapping.MapColumnToDestination(item); switch(columnName) { case "ID": { row[item.ColumnName] = DataSchemaTypeConverter.ConvertTo(i, item.DataType); break; } case "Domain": { row[item.ColumnName] = DataSchemaTypeConverter.ConvertTo(domainList[i], item.DataType); break; } default: { break; } } } if (dt.Rows.Add(row) == DataTableStore.ABORT) break; } return dt; } public override void Setup() { //Download the WebPage System.Net.WebClient webClient = new System.Net.WebClient(); string domainListContent = webClient.DownloadString("http://www.joewein.de/sw/spam-freemailer.htm"); if ( !string.IsNullOrEmpty(domainListContent)) { //Parse the WebPage for Data string line = string.Empty; bool start = false; using(var reader = new System.IO.StringReader(domainListContent)) { while((line = reader.ReadLine()) != null) { if ( !string.IsNullOrWhiteSpace(line)) { if ( line.Contains("<pre>") || line.Contains("</pre>")) { start = !start; continue; } if ( start ) { domainList.Add(line.Trim()); } } } } } } }

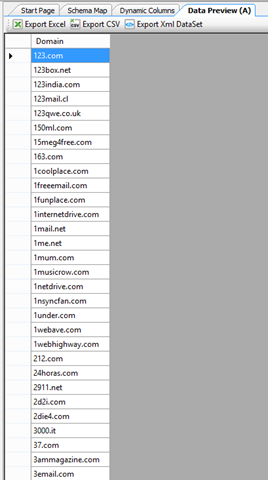

Then previewing the data you see a nice up to date list created dynamically from the web page.

As you can see from a little bit of upfront coding 1 hour maximum you can create an integration of systems that would otherwise take take somebody probably over 1 hour everyday to update.