Dynamics to SQL Data Export

In this guide we show how you can use Data Synchronisation Studio to build a Dynamics/Dataverse to SQL Server Data Export solution. Whilst only creating a single Data Sync project and using Project Automation to enable automatic creation of SQL Tables from the Dynamics schema.

You could also use this approach with other Datasources like Salesforce or SharePoint.

Overview

We will build a single Data Sync project which will automatically update itself based on the requested entity. If the schema of the entity changes we will drop the existing table and re-create it.

The steps required for this to work are as follows.

- Connect to Entity named in Project Property.

- Discover Dynamics Entity Schema.

- Create matching SQL Table in SQL Database from the Dynamics Schema.

- Check if the schema has changed and re-create the table if necessary.

- Setup Source and Target in the Data Sync project and update Schema Map to match.

Command Line

Once this project is configured you can call the sync for different entities right from the command line like this.

"%DATASYNCINSTALLPATH60%\Simego.DataSync.Run.exe" -e project.dsprj -p:entity=account

"%DATASYNCINSTALLPATH60%\Simego.DataSync.Run.exe" -e project.dsprj -p:entity=contact

Setup Project

First we need a SQL Table in our target database used to track which entities have been created and a hash value of the schema columns.

Use the script below to create your table for tracking the created entity tables.

CREATE TABLE [dbo].[_dsTableMetadata] ( [ID] int IDENTITY(1, 1) NOT NULL, [Name] nvarchar(255) NOT NULL, [Hash] nvarchar(100) NOT NULL, [Script] nvarchar(MAX) NULL, [Created] datetime DEFAULT(getutcdate()) NOT NULL, [Updated] datetime DEFAULT(getutcdate()) NOT NULL ) GO ALTER TABLE [dbo].[_dsTableMetadata] ADD CONSTRAINT [PK__dsTableMetadata_BCAF3B7C] PRIMARY KEY CLUSTERED ([ID] ASC) GO ALTER TABLE [dbo].[_dsTableMetadata] ADD CONSTRAINT [IX__dsTableMetadata_FDE2506A] UNIQUE NONCLUSTERED ([Name] ASC)

Next we need a DataSync Project configured with connection from the Connection Library for Dynamics and SQL. At this point it doesn't really matter about the Schema Map and if the project works its about configuring the source and target systems.

Start with a new Project and connect the source to the Dynamics 'account' entity from the Connection Library. Then connect the Target to a SQL Table from the Connection Library.

Under File->Properties->Properties add a new Project Property named 'entity' with a value 'account'.

Project Automation Code

This is the code which dynamically creates a SQL Table matching the source Data Schema and sets up the project and schema map to match.

These are the steps we need the code to complete.

- Setup the source and target to point to the Entity value stored in the Project Property

entity.

Then when the Schema Map is requested in the GetDataSchema override function.

- Get the source Schema.

- Check if the SQL Table already exists in the SQL Database or whether to re-create the Table.

- Converts the source schema into a SQL Table script.

- Create the SQL Table on the target SQL Server.

- Return the updated Schema Map.

Enable Project Automation on your project and replace the code with this version below.

using System; using System.Collections.Generic; using System.Data; using System.Globalization; using System.Linq; using System.Text; using Simego.DataSync; using Simego.DataSync.Engine; class ProjectAutomationOverride : Simego.DataSync.Automation.ProjectAutomationShim //Do Not Change This Line { public override void Start() { // Set the Entity on DataSource A DataSourceA.Entity = Properties["entity"]; // Set the Entity in DataSource B DataSourceB.SourceTable = $"[dbo].[{DataSourceA.Entity}]"; // AutoMap the Schema AutoSchemaMap = true; } public override DataSchema GetDataSchema(DataSchema schema) { var schemaA = DataSourceA.GetDefaultDataSchema(); // Get the Key columns for the PK. var keyColumns = string.Join(", ", schemaA.GetUniqueColumns().Select(p => $"[{p.ColumnName}]")); // Get the column list var columns = string.Join(",\r\n", schemaA.Map.Where(p => isValidColumn(p)).Select(column => $"\t[{column.ColumnName}] {getSqlColumnType(column)} {(column.AllowNull ? "NULL" : "NOT NULL")}")); // Generate a Hash of the columns to detect if any have changed. var columnHash = getHash(columns); // Check if the entity Table already exists in the DB. By getting the existing Hash from our _dsTableMetadata table. var existingHash = DataSourceB.ExecuteScalar($"SELECT Hash FROM _dsTableMetadata WHERE Name = '{DataSourceB.SourceTable}'") as string; if (existingHash == null) { // Create Table Script var tableScript = getTableScript(keyColumns, columns); // Create the Target Table DataSourceB.ExecuteNonQuery(tableScript); // Update the _dsTableMetadata Table DataSourceB.ExecuteNonQuery("INSERT INTO [dbo].[_dsTableMetadata] (Name, Hash, Script) VALUES (@p0, @p1, @p2)", DataSourceB.SourceTable, columnHash, tableScript); // Write to Log Trace.WriteLine($"Created Table '{DataSourceB.SourceTable}'"); } else if(existingHash != columnHash) { // Create Table Script var tableScript = getTableScript(keyColumns, columns); // Update the _dsTableMetadata Table DataSourceB.ExecuteNonQuery("UPDATE [dbo].[_dsTableMetadata] SET Hash=@p0, Script=@p1, Updated=getutcdate() WHERE Name=@p2", columnHash, tableScript, DataSourceB.SourceTable); // Drop the existing Table DataSourceB.ExecuteNonQuery($"DROP TABLE {DataSourceB.SourceTable}"); // Create the Target Table DataSourceB.ExecuteNonQuery(tableScript); // Write to Log Trace.WriteLine($"Updated Table '{DataSourceB.SourceTable}'"); } // Get the Target Schema var schemaB = DataSourceB.GetDefaultDataSchema(); // Return an Auto-Map return AutoSchemaMap ? schemaA.ToAutoMap(schemaB) : schema; } string getTableScript(string keyColumns, string columns) { return string.Concat( $"CREATE TABLE {DataSourceB.SourceTable} (\r\n", // Append the Columns columns, ")\r\n", // Add the Primary Key $"ALTER TABLE {DataSourceB.SourceTable} ADD CONSTRAINT [PK_dbo_{DataSourceA.Entity}] PRIMARY KEY CLUSTERED ({keyColumns})\r\n"); } bool isValidColumn(DataSchemaItem column) { // Use to Filter out invalid columns. return true; } string getSqlColumnType(DataSchemaItem column) { switch(column.DataType.FullName) { case "System.Guid": { return "uniqueidentifier"; } case "System.Int64": { return "bigint"; } case "System.Int16": case "System.Int32": { return "int"; } case "System.Single": case "System.Double": { return "float"; } case "System.Decimal": { return "decimal(19,4)"; } case "Simego.DataSync.Date": case "System.DateTime": { return "datetime"; } case "System.Boolean": { return "bit"; } case "System.String": { if(column.Length > 0 && column.Length < 4000) { return $"nvarchar({column.Length})"; } if(column.ColumnName.EndsWith("codename")) { return "nvarchar(100)"; } break; } } // Default Column value return "nvarchar(4000)"; } static string getHash(string input) { using (var sha1 = System.Security.Cryptography.SHA1.Create()) { // Convert hash bytes to hex string return string.Concat(sha1.ComputeHash(Encoding.UTF8.GetBytes(input)).Select(b => b.ToString("x2"))); } } }

You can adjust this code to work best for you its just a guide on the process you may want look at how the SQL Columns are defined and potentially implement some column filtering via the

isValidColumnfunction.

Test Project

You can now test this project by running in the designer you should see an 'account' table is created and there is a result in the 'Compare Results' window to write to the SQL Table.

Note: The Designer doesn't always update the UI when running dynamically like this only when re-opening the Data Sync project file.

Command Line

You can now run this on the command line to process each entity you want to sync.

"%DATASYNCINSTALLPATH60%\Simego.DataSync.Run.exe" -e project.dsprj -p:entity=account

"%DATASYNCINSTALLPATH60%\Simego.DataSync.Run.exe" -e project.dsprj -p:entity=contact

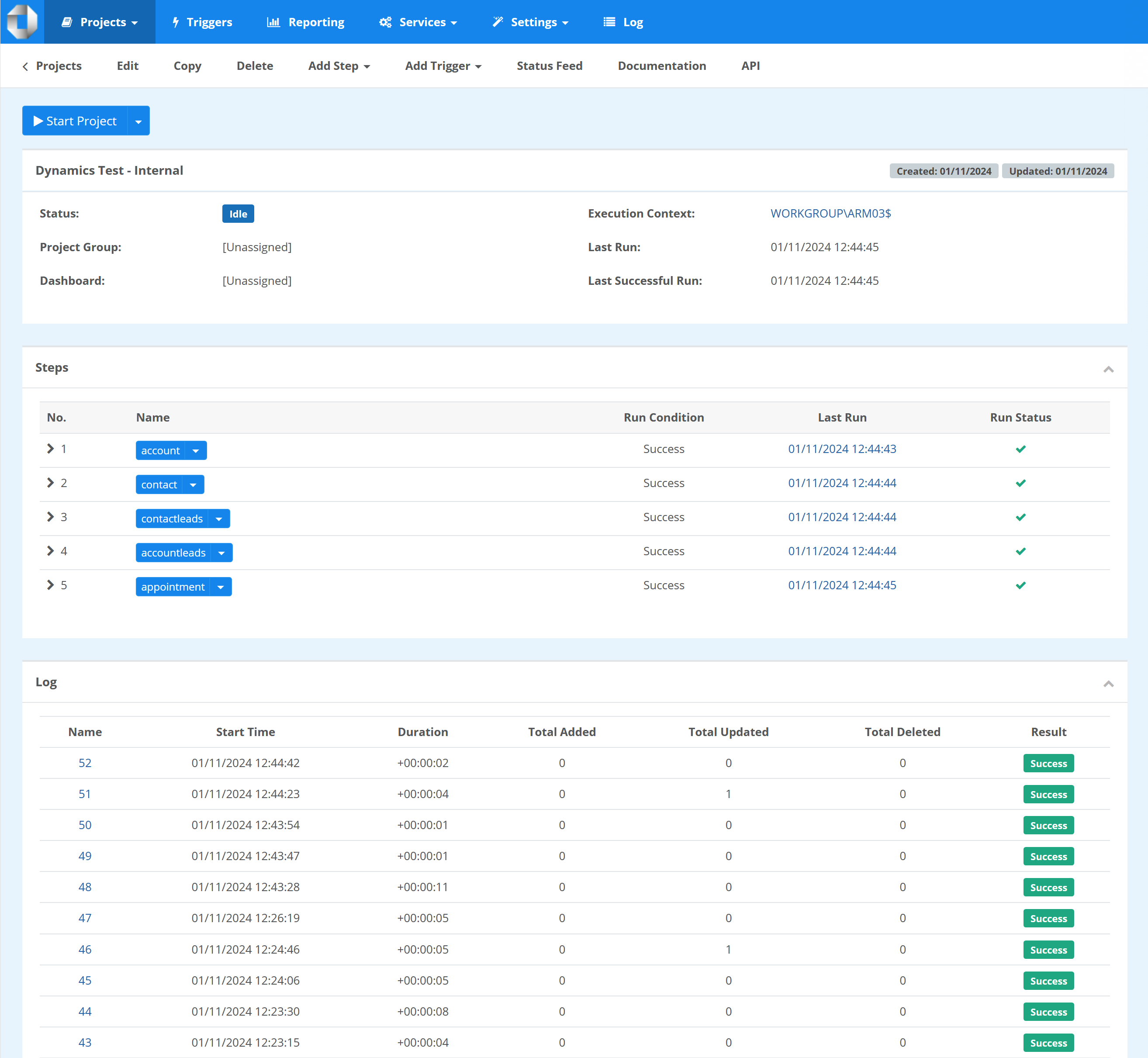

Ouvvi

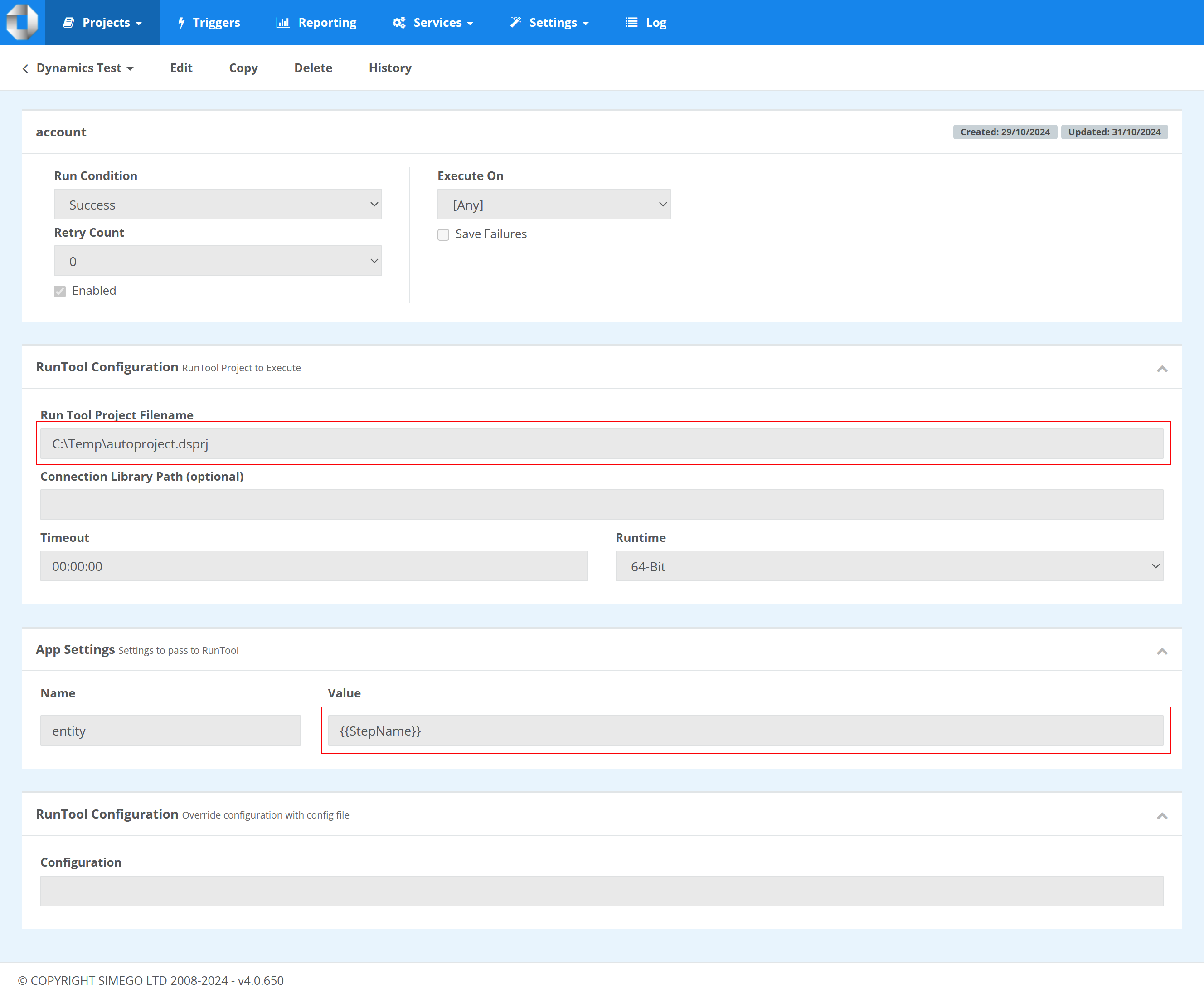

You can use the Run Tool Project step in Ouvvi to run these and pass the entity name in the App Settings.

By running these via the RunTool step means they are run in a new process which has a performance hit on loading the Dynamics Schema. We are building a new Step Handler for Ouvvi to allow you to run these steps In-Process from a single project file and pass the project property configuration.

The Ouvvi Step should be a RunTool Project Step where we can pass the Entity name via the App Settings. By using the value {{StepName}} we can inject the name of the Ouvvi Project Step as the Entity name.

Improving Performance - Incremental Sync

Requires an update to Data Sync Version 6.0.3388

We can improve the performance of the synchronisation process by using an incremental sync where possible and using a new Ouvvi Step handler to ensure the steps run In-Process with the Ouvvi Service.

To implement Incremental Sync we need to change the Data Sync Mode to return those records which were created or modified since the last sync.

Some Dynamics entities have a column modifiedon which we can use with a Fetch XML Filter to return only those changed records.

By changing our code to check for this column we can switch the sync mode in Project Automation and apply this filter at runtime. The code below includes the changes to switch to incremental mode and update the Fetch XML Filter at runtime to only return those changed records.

using System; using System.Collections.Generic; using System.Data; using System.Globalization; using System.Linq; using System.Text; using Simego.DataSync; using Simego.DataSync.Engine; class ProjectAutomationOverride : Simego.DataSync.Automation.ProjectAutomationShim //Do Not Change This Line { public override void Start() { // Set the Entity on DataSource A DataSourceA.Entity = Properties["entity"]; // Set the Entity in DataSource B DataSourceB.SourceTable = $"[dbo].[{DataSourceA.Entity}]"; // AutoMap the Schema AutoSchemaMap = true; } public override DataSchema GetDataSchema(DataSchema schema) { // Set the Sync Mode to Normal SyncMode = SyncModeEnum.SyncAtoB; // Reset the Fetch Expression DataSourceA.FetchXmlFilterExpression = null; // Load the Source Schema var schemaA = DataSourceA.GetDefaultDataSchema(); // Get the Key columns for the PK. var keyColumns = string.Join(", ", schemaA.GetUniqueColumns().Select(p => $"[{p.ColumnName}]")); // Get the column list var columns = string.Join(",\r\n", schemaA.Map.Where(p => isValidColumn(p)).Select(column => $"\t[{column.ColumnName}] {getSqlColumnType(column)} {(column.AllowNull ? "NULL" : "NOT NULL")}")); // Generate a Hash of the columns to detect if any have changed. var columnHash = getHash(columns); // Check if the entity Table already exists in the DB. By getting the existing Hash from our _dsTableMetadata table. var existingHash = DataSourceB.ExecuteScalar($"SELECT Hash FROM _dsTableMetadata WHERE Name = '{DataSourceB.SourceTable}'") as string; if (existingHash == null) { // Create Table Script var tableScript = getTableScript(keyColumns, columns); // Create the Target Table DataSourceB.ExecuteNonQuery(tableScript); // Update the _dsTableMetadata Table DataSourceB.ExecuteNonQuery("INSERT INTO [dbo].[_dsTableMetadata] (Name, Hash, Script) VALUES (@p0, @p1, @p2)", DataSourceB.SourceTable, columnHash, tableScript); // Write to Log Trace.WriteLine($"Created Table '{DataSourceB.SourceTable}'"); } else if(existingHash != columnHash) { // Create Table Script var tableScript = getTableScript(keyColumns, columns); // Update the _dsTableMetadata Table DataSourceB.ExecuteNonQuery("UPDATE [dbo].[_dsTableMetadata] SET Hash=@p0, Script=@p1, Updated=getutcdate() WHERE Name=@p2", columnHash, tableScript, DataSourceB.SourceTable); // Drop the existing Table DataSourceB.ExecuteNonQuery($"DROP TABLE {DataSourceB.SourceTable}"); // Create the Target Table DataSourceB.ExecuteNonQuery(tableScript); // Write to Log Trace.WriteLine($"Updated Table '{DataSourceB.SourceTable}'"); } else { // If this Entity has a 'modifiedon' column set the project to an incremental sync. if(schemaA.Map.Any(p => p.ColumnName == "modifiedon")) { // Set the Sync Mode to Incremental SyncMode = SyncModeEnum.SyncAtoBIncremental; // Get Max of 'modifiedon' from current table. var checkpoint = DataSourceB.ExecuteScalar($"SELECT MAX(modifiedon) FROM {DataSourceB.SourceTable}"); // Set the DataSource Filter DataSourceA.FetchXmlFilterExpression = DataSourceA.GetFetchFilterXmlForModifiedSince((DateTime)checkpoint, "gt"); } } // Get the Target Schema var schemaB = DataSourceB.GetDefaultDataSchema(); // Return an Auto-Map return AutoSchemaMap ? schemaA.ToAutoMap(schemaB) : schema; } string getTableScript(string keyColumns, string columns) { return string.Concat( $"CREATE TABLE {DataSourceB.SourceTable} (\r\n", // Append the Columns columns, ")\r\n", // Add the Primary Key $"ALTER TABLE {DataSourceB.SourceTable} ADD CONSTRAINT [PK_dbo_{DataSourceA.Entity}] PRIMARY KEY CLUSTERED ({keyColumns})\r\n"); } bool isValidColumn(DataSchemaItem column) { // Use to Filter out invalid columns. return true; } string getSqlColumnType(DataSchemaItem column) { switch(column.DataType.FullName) { case "System.Guid": { return "uniqueidentifier"; } case "System.Int64": { return "bigint"; } case "System.Int16": case "System.Int32": { return "int"; } case "System.Single": case "System.Double": { return "float"; } case "System.Decimal": { return "decimal(19,4)"; } case "Simego.DataSync.Date": case "System.DateTime": { return "datetime"; } case "System.Boolean": { return "bit"; } case "System.String": { if(column.Length > 0 && column.Length < 4000) { return $"nvarchar({column.Length})"; } if(column.ColumnName.EndsWith("codename")) { return "nvarchar(100)"; } break; } } // Default Column value return "nvarchar(4000)"; } static string getHash(string input) { using (var sha1 = System.Security.Cryptography.SHA1.Create()) { // Convert hash bytes to hex string return string.Concat(sha1.ComputeHash(Encoding.UTF8.GetBytes(input)).Select(b => b.ToString("x2"))); } } }

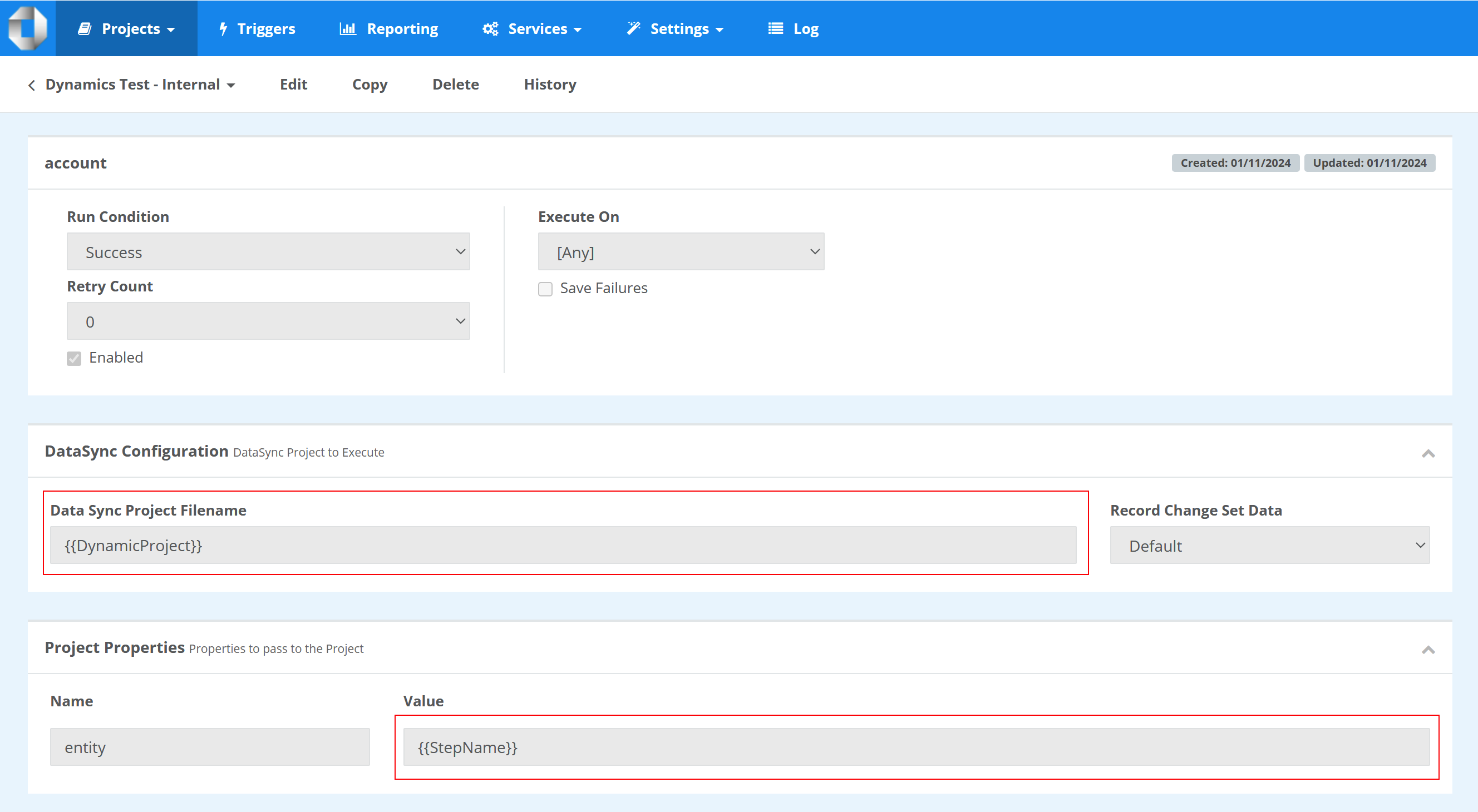

Ouvvi

We can now use a new Ouvvi Step handler to run a Data Sync project from a file and pass the name of the entity as a project property just like the Run Tool Step.

Use the Data Sync Project File step type with a User Setting pointing to the actual file path so it can be easily changed.

To add additional entities to the export you only need to use the Copy Step function and name it the same as the Dynamics entity.

Now you should see that the sync can run through much quicker as those with no changes will be processed quickly.